First off, I’m going to tell you that Microsoft SQL Server SSIS is THE MOST INANE, FRUSTRATING TOOL I’VE ENCOUNTERED IN OVER 2 DECADES OF SOFTWARE ENGINEERING! It’s designed for the point-and-click query builder, and that makes it really difficult to use for real engineers who write code instead of clicking buttons. I really do detest its design and mish-mash of concepts, and most of all its horrible documentation.

THAT SAID… I’m here to offer a little bit of help.

TODAY’s TASK – Using SSIS Package Variables in a useful way.

What I needed to accomplish today was dynamically setting a database’s compatibility level, based on its current level. (Clear enough?)

The reason for this? MS SQL Server 2005 supports the PIVOT operation (why do I feel like the spreadsheet coders designed this crazy thing?), but SQL 2000 does not. No big deal, EXCEPT when it comes to using a database that once lived on an SQL 2000 platform and now lives on a 2005 platform. Buried deep in the bowels of the settings is a flag that says “I know I’m running on a more modern server, but I refuse to honor all of its capabilities.. I’m just going to be a whiny pain in the ass until you tell me to stop”.
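For reference, here is a minimal sketch of the kind of query that trips over that flag. The temp table, its columns, and the sample rows are all made up for illustration; they are not from the package I was fixing. At compatibility level 90 this should run, while at level 80 the parser rejects it with a syntax error near PIVOT.

```sql
-- Hypothetical sample data, for illustration only.
CREATE TABLE #Sales (Region varchar(20), SalesYear int, Amount money);
INSERT INTO #Sales VALUES ('East', 2004, 100);
INSERT INTO #Sales VALUES ('East', 2005, 150);
INSERT INTO #Sales VALUES ('West', 2005, 75);

-- Turn the SalesYear values into columns, one row per Region.
-- PIVOT is only recognized when the current database is at level 90 or higher.
SELECT Region, [2004], [2005]
FROM (SELECT Region, SalesYear, Amount FROM #Sales) AS src
PIVOT (SUM(Amount) FOR SalesYear IN ([2004], [2005])) AS pvt;

DROP TABLE #Sales;
```

The check is made against the database you are connected to, which is why the fix has to be applied per database rather than once per server.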

So, that’s what this task is all about…

Dynamically Detecting an SQL Server compatibility level of 80, and bumping it up to 90 to support the features of the server it’s running on

Crazy concept, I realize that. HOWEVER, the hitch in this deal is that when I am done using the PIVOT command (I’m just fixing a kludge written by someone else; I’ve never used PIVOT, but that’s for another day.. next thing you know someone’s going to try to sell me on the virtues of File Maker! GAK!), I need to set the DB compatibility level back down to the 2000 setting so OTHER code can run without blowing up.

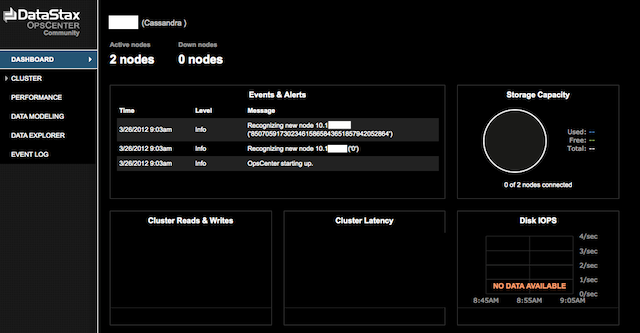

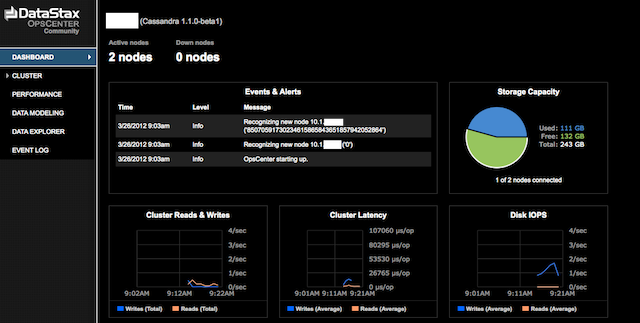

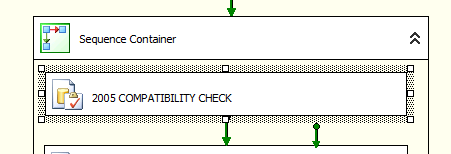

Getting to the task, using an SSIS Execute SQL Task

First things first, you need to create an Execute SQL Task in your SSIS package. I did this inside a sequence container to clearly define what this was confined to within the broader scope of the package. Here’s a screen shot:

Next is planning and writing the code to check the compatibility level and store it in a package variable. However, the only way I could find to really do this, outside of using a Script task and programming in C# (last thing I really want to dig into right now), was returning a row from the query and setting that BACK into a pre-defined package variable.

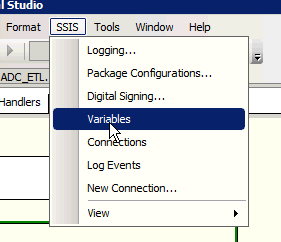

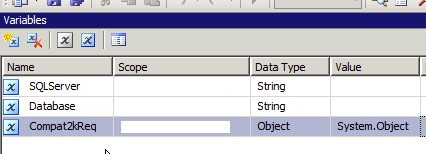

So let’s move to defining that package variable next… this is an important step that took me several hours to find any useful information on, and to use. It’s important that you use the nutty Micro$oft syntax to define the variable (why isn’t that point-and-click too, right?).

You need to go to your SSIS menu item and select ‘Variables’.

Now define your SSIS package variable. I used the name

- Compat2kReq

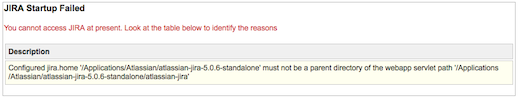

UPDATE: What I later found is that you MUST set the data type to OBJECT for the value to be properly set. If you do not do that, a wonderful, inexplicable and generally BS message is presented to you:

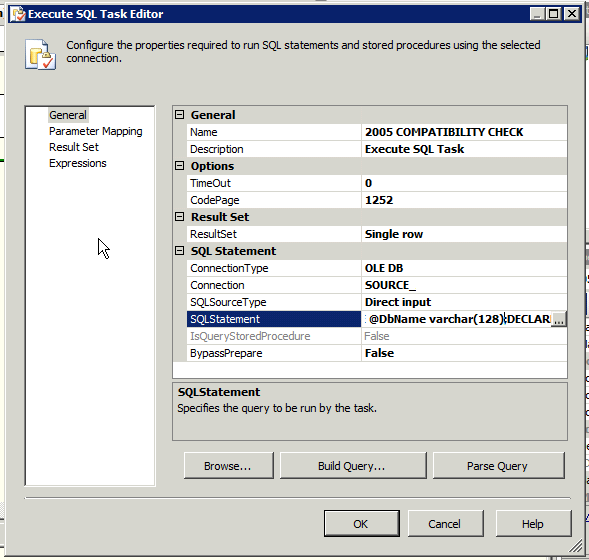

Getting back on task… EDIT the Execute SQL Task, click on the SQLStatement cell until you see the ellipsis […] to the right of the cell, then click on that to open the SQL editor….

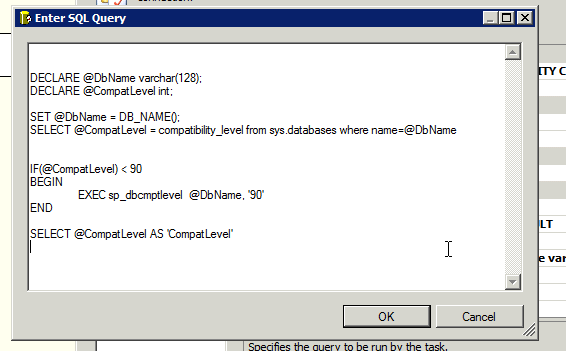

With the dialog open, I inserted the following code. I’ll go over what it all means next.

DECLARE @DbName varchar(128);

DECLARE @CompatLevel int;

SET @DbName = DB_NAME();

SELECT @CompatLevel = compatibility_level from sys.databases where name=@DbName

IF(@CompatLevel) < 90 BEGIN EXEC sp_dbcmptlevel @DbName, '90' END

SELECT @CompatLevel AS 'CompatLevel'

First section is the declaration of two local variables.

DECLARE @DbName varchar(128); -- will store the name of database

DECLARE @CompatLevel int; -- will store the starting compatibility level

Set the values next. The first statement stores the database name, and the second gets the current compatibility level. For a database that came from an SQL2000 server, that value is '80'. To use the PIVOT function, it must be '90' or higher. We'll get to that after this.

SET @DbName = DB_NAME(); -- dynamically store DB_NAME() into var, seems like a redundant step but some MS SQL statements, such as ALTER DATABASE, won't evaluate a function call given as an argument, so you have to play fun tricks with MS SQL

SELECT @CompatLevel = compatibility_level from sys.databases where name=@DbName -- now I set the current compatibility level into a local var. I might have been able to do this another way, but mapping the variable, as you'll see later, was the fastest way to solve this problem.

Next, test to see if the level is high enough, and if not, bump it up to the minimum required, which is 90. Level 90 indicates SQL2005.

IF(@CompatLevel) < 90 -- see if the level is less than required 90.

BEGIN

EXEC sp_dbcmptlevel @DbName, '90' -- set to required level, 90

END
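A side note on that ALTER DATABASE remark: on SQL Server 2008 and later, sp_dbcmptlevel is deprecated in favor of ALTER DATABASE ... SET COMPATIBILITY_LEVEL, and that statement takes neither a variable nor a function call for the database name. A rough sketch of the same bump using dynamic SQL is below. This is not what I ran on the 2005 box, and the @Sql variable is just a name I picked for the example.

```sql
-- Sketch for SQL Server 2008 or later only; SQL 2005 does not have this syntax.
DECLARE @CompatLevel int;
DECLARE @Sql nvarchar(400);

SELECT @CompatLevel = compatibility_level
FROM sys.databases
WHERE name = DB_NAME();

IF @CompatLevel < 90
BEGIN
    -- ALTER DATABASE needs a literal name, so build the statement as a string.
    -- QUOTENAME guards against odd characters in the database name.
    SET @Sql = N'ALTER DATABASE ' + QUOTENAME(DB_NAME())
             + N' SET COMPATIBILITY_LEVEL = 90;';
    EXEC sp_executesql @Sql;
END

SELECT @CompatLevel AS 'CompatLevel';
```

Newer servers drop support for the older levels, so check which values your version accepts before hard-coding 90.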

The final step, and this is crucial to detecting this change, and resetting it later, is to save the ORIGINAL compatibility level in the package variable for later use. Returning it as a single row ResultSet is how I solved this for the Hot-Patch I was tasked with creating.

SELECT @CompatLevel AS 'CompatLevel' -- select variable as a result row.

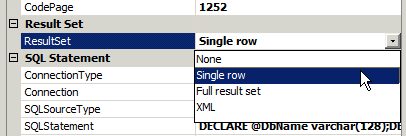

Now, before heading into the next area, I'm going to direct you to the General pane again and point out a second important attribute to set: the ResultSet value. I'm only returning a single row, so I set it to 'Single row'. You will need to do this; by default it is 'None' and nothing is returned.

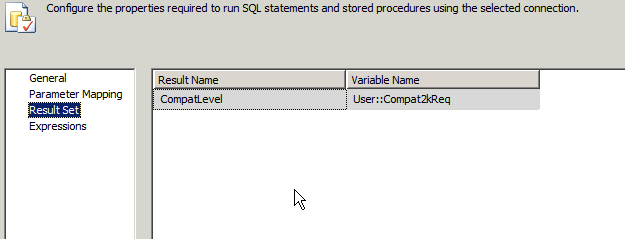

Now that part 1 is done, next is to define that the package variable we set up earlier in this task is going to be an OUTPUT from the Execute SQL Task we're working on.

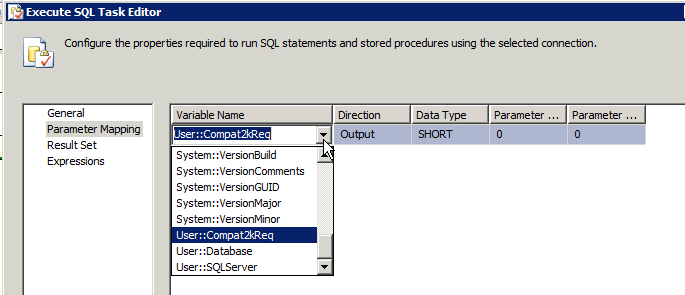

Click on the 'Parameter Mapping' item and you'll see an empty table (unless you already added something before).

Use the 'ADD' button to create an entry. There you'll select the package variable to reference. You'll also want to define its type (I used SHORT) and whether it's INPUT or OUTPUT. I'm using OUTPUT, meaning the SQL Task will OUTPUT a value that will be stored into this variable.

I set a parameter sequence of 0 (meaning it's the first) and an initial value of '0'.

The final step in this phase is to set up your Result Set. The GUI will help you by displaying your OUTPUT parameter automatically; all you need to do is define the row name (the column alias) returned by your final select. Here is the code again, with the row name marked. You can use any name you want.. I just happened to use the same name as my variable to confuse you.

SELECT @CompatLevel AS 'CompatLevel' -- result row name
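I promised at the top that the level has to go back down once the PIVOT work is finished. Here is a rough sketch of what a second Execute SQL Task at the end of the sequence container could run. Treat it as a starting point, not gospel. It assumes an OLE DB connection (where ? is the parameter marker), that Compat2kReq is mapped on the Parameter Mapping page as an INPUT with parameter name 0, and that the variable ends up holding a plain integer. @OrigLevel is just a name I made up for the example.

```sql
-- Hypothetical restore step, run after the PIVOT queries are done.
DECLARE @DbName varchar(128);
DECLARE @OrigLevel int;

SET @DbName = DB_NAME();
SET @OrigLevel = ?;  -- original level saved in Compat2kReq by the first task

-- Only touch the setting if the first task actually raised it.
IF @OrigLevel < 90
BEGIN
    EXEC sp_dbcmptlevel @DbName, @OrigLevel;
END
```

If the database was already at 90 or higher, the first task never changed anything, so this one leaves it alone too.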

So, there it is.. pretty simple to do, isn't it? I think it's crazy, but I'm not an M$ fanboy so... just keep that in mind.

If you have questions, leave a comment. I can't guarantee I'll be of any help, but if I can, I'll do my best.

I hope this was useful for you. I spent several hours poring over docs and web pages and never really found a one-stop explanation, so I wrote this one up, because I sure hope I don't forget!